Recent developments in communications, computational capability, and data availability all lend themselves to a high level of intelligence both on the battlefield and within the utility. The proposed benefits of the "smart grid" align well with recent developments in data integration, mining, and fusion.

Data Management and Smart Grids

Bert Taube | Versant Corporation

1. What does the notion of Big Data stand for? Why do the characteristics of Big Data apply to the smart grid problem?

“Big Data,” as defined by Doug Laney in 2001, is a three-dimensional space of orthogonal variables of volume, velocity, and variety. Laney was operating in a paradigm of a typical business, such as manufacturing, where profitability is often achieved by the minimization of fixed assets, work in progress (WIP) is measured in days, weeks, or months, and real-time data collection and analysis are often not critical to ensure the profitability of the organization. The value chain for manufacturing almost always crosses company boundaries. However, in the utility industry, there are vertically integrated and deregulated variants that have to act exactly the same.

The utility industry is unique in that the product is consumed simultaneously with its production (but the price may be set years in advance) and the focus is on the utilization of assets (which are often defined by circumstances) rather than the minimization of assets. In this environment, the acquisition of real-time data can be costly and can seriously impact the bottom line. The utility industry must still deal with volume, velocity, and variety as well as two new “V’s” – validity and veracity.

Validity adds a fourth dimension to Laney’s model: time. Information in the utility environment often has a “shelf life” and therefore needs to be stored only for a fixed amount of time. After that time, the data may no longer be needed for evaluation. Given the cost of storing large quantities of data, the questions of when to archive and when to dispose of data become relevant.
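
The shelf-life idea can be made concrete with a small retention check. The following is a minimal Python sketch, not taken from any particular product. The data-type names and the retention periods in `RETENTION` are hypothetical placeholders, since real values depend on regulatory and business requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical shelf lives per data type (assumed values for illustration).
RETENTION = {
    "telemetry": timedelta(days=90),
    "oscillography": timedelta(days=365),
    "usage": timedelta(days=7 * 365),
}


@dataclass
class Record:
    kind: str
    recorded_at: datetime


def is_expired(record, now):
    """Return True once the record has outlived its shelf life."""
    return now - record.recorded_at > RETENTION[record.kind]


def partition(records, now):
    """Split records into those to keep online and those to archive or dispose of."""
    keep = [r for r in records if not is_expired(r, now)]
    archive = [r for r in records if is_expired(r, now)]
    return keep, archive


now = datetime(2013, 1, 1)
records = [
    Record("telemetry", datetime(2012, 12, 15)),  # 17 days old: keep
    Record("telemetry", datetime(2012, 6, 1)),    # older than 90 days: archive
    Record("usage", datetime(2010, 1, 1)),        # within the assumed 7 years: keep
]
keep, archive = partition(records, now)
print(len(keep), len(archive))
```

Whether an expired record is archived to cheaper storage or deleted outright is a policy decision that this sketch leaves open.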

Veracity is the recognition that data is not perfect and that achieving “perfect” data has a cost associated with it. The questions become 1) how good must the data be to achieve the necessary level of analysis and 2) at what point does the cost of correcting the data exceed the benefit of obtaining it?

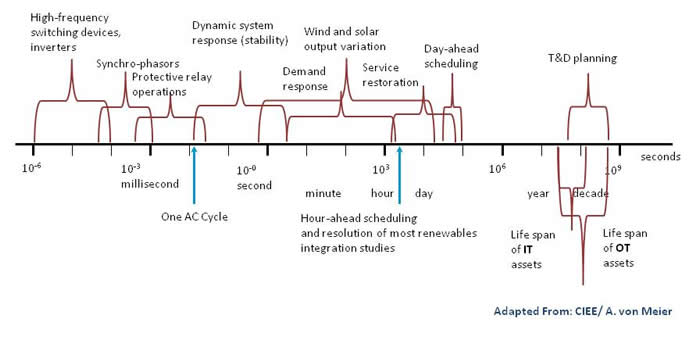

The figure illustrates the unique situation of the utility industry, where data time scales vary over 15 orders of magnitude. Relational databases and time-serialized databases may not be able to capture the causal effects, over years or decades, of events that occur in the millisecond or microsecond range.

Figure – Uniqueness of data in the electrical utility sector.

Analyzing huge volumes of data that spans multiple orders of magnitude in time scale is a serious challenge for current data-management technologies.

2. Is the type of data management approach important to manage Big Data collected from a smart grid and if so why?

The relational database, the most prevalent in the utility industry, was introduced by Edgar Codd in 1970. Codd developed the concept of the table to store data of similar information, with keys between tables that in turn define relationships between the tables. A design method known as normalization helps reduce the amount of data repeated in multiple tables. Optimizing the data to reduce the size of the database through normalization does not facilitate the retrieval of data, nor does it help with the analysis of the data. Instead, the natural relationship between data elements is often lost through normalization, and creating queries that return related data located in different tables becomes a tortuous process. Standard SQL queries rely on JOINs between tables, which are expensive in CPU and memory and require skill and experience to write. This type of database technology does not work particularly well with modern, object-oriented languages. The structures of the commands and messages must be broken apart and translated into the SQL-based commands that the relational database typically uses. This mismatch of structure wastes valuable time when inserting, changing, or retrieving data.
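
As an illustration of the JOIN and translation steps described above, the following Python sketch uses the standard-library `sqlite3` module with an in-memory database. The `substation` and `transformer` tables and their contents are hypothetical and exist only for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE substation (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE transformer (
        id            INTEGER PRIMARY KEY,
        name          TEXT NOT NULL,
        substation_id INTEGER NOT NULL REFERENCES substation(id)
    );
    INSERT INTO substation VALUES (1, 'North'), (2, 'South');
    INSERT INTO transformer VALUES (10, 'T1', 1), (11, 'T2', 1), (12, 'T3', 2);
""")

# Related rows live in different tables, so answering "which transformers
# belong to substation North?" requires a JOIN on the key columns.
rows = conn.execute(
    """
    SELECT t.name
    FROM transformer AS t
    JOIN substation AS s ON t.substation_id = s.id
    WHERE s.name = ?
    ORDER BY t.name
    """,
    ("North",),
).fetchall()

# The result is a list of tuples that the application must translate
# back into its own structures.
transformer_names = [name for (name,) in rows]
print(transformer_names)
conn.close()
```

Even in this two-table case the application has to know the key columns and unpack the result rows. Each additional level of the asset hierarchy adds another JOIN.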

Another common type of data store is the time-serialized database, such as a historian, commonly used to gather streaming data from sources such as supervisory control and data acquisition (SCADA) systems. This technology typically stores data, with a timestamp and an indication of data quality, only when it changes. This data-management technology was designed for handling large amounts of data where input/output (I/O) speeds and available storage are the limiting factors.
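
The store-on-change behavior can be sketched in a few lines of Python. This is a simplified illustration rather than the algorithm of any specific historian product. The `Sample` type, the `compress_on_change` function, and the optional `deadband` parameter are assumptions introduced for the example.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    timestamp: float  # seconds since start of capture
    value: float
    quality: str      # e.g. "good" or "bad"


def compress_on_change(samples, deadband=0.0):
    """Keep a sample only if its value or quality differs from the last stored one.

    `deadband` (an assumed extension) ignores value changes no larger than
    the given amount.
    """
    stored = []
    for sample in samples:
        last = stored[-1] if stored else None
        if (
            last is None
            or abs(sample.value - last.value) > deadband
            or sample.quality != last.quality
        ):
            stored.append(sample)
    return stored


# Five polled readings at a 4-second cycle; only three carry new information.
polled = [
    Sample(0.0, 120.0, "good"),
    Sample(4.0, 120.0, "good"),
    Sample(8.0, 120.0, "good"),
    Sample(12.0, 121.5, "good"),
    Sample(16.0, 121.5, "bad"),
]
stored = compress_on_change(polled)
print(len(polled), "->", len(stored))
```

The saving in storage comes at the cost of reconstruction: a reader of the stored series has to assume that the value held steady between stored points.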

As the cost of storage continues to plummet and the bandwidth and I/O speeds of networks and servers continue to increase, it makes less sense to utilize these types of data-management technologies.

According to Jeffrey Taft, Paul de Martini, and Leonardo von Prellwitz [“Utility Data Management & Intelligence”, Cisco White Paper, May 2012], utility data for advanced data management and analytics can be summarized as follows:

| Data Type | Description | Key Characteristics |
|---|---|---|
| Telemetry | Measurements made repetitively on power grid variables and equipment operating parameters; some of this data is used by SCADA systems. | Constant volume flow rates when the data-collection technique is polling; standard SCADA polling cycles are about 4 seconds, but the trend is to go faster; telemetry can involve a very large number of sensing points. Telemetry data usually comes in small packets (perhaps 1500 bytes or so). |
| Oscillography | Sample data from voltage and current waveforms. | Typically available in bursts or as files stored in the grid device, captured due to a triggering event; transferred on demand for use in various kinds of analyses. For some kinds of sensing systems, waveform data is acquired continuously and is consumed at or near the sensing point to generate characterization values that may be used locally or reported out (e.g., converting waveform samples to RMS voltage or current values periodically); waveform sampling may be at very high rates from some devices such as power quality monitors. |
| Usage Data | Typically meter data, although metering can occur in many forms beside residential usage meters; typically captured by time-integrating demand measurements combined with voltage to calculate real power. | May be acquired on time periods ranging from seconds to 30 days or more; residential metering may store data taken as often as 15 minutes, to be reported out of the meter one to three times per day. |
| Asynchronous Event Messages | May be generated by any grid device that has embedded processing capability; typically event messages generated in response to some physical event; this category also includes commands generated by grid-control systems and communicated to grid devices; may also be a response to an asynchronous business process, e.g., a meter ping or meter voltage read. | For this class, burst behavior is a key factor; depending on the nature of the devices, the communication network may be required to handle peak bursts that are up to three orders of magnitude larger than base rates for the same devices; also, because many grid devices will typically react to the same physical event, bursting can easily become flooding as well. |
| Meta-data | Data that is necessary to interpret other grid data or to manage grid devices and systems or grid data. | Meta-data includes power grid connectivity, network and device management data, point lists, sensor calibration data, and a rather wide variety of special information, including element names, which may have high multiplicity. |
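
For a rough sense of scale, the telemetry and event-message figures in the table can be turned into back-of-the-envelope rates. In the Python sketch below, the 1500-byte packet size, the 4-second polling cycle, and the three-orders-of-magnitude burst factor come from the table. The number of sensing points and the base event rate are assumed values chosen only for illustration.

```python
# Figures from the table
PACKET_BYTES = 1500      # approximate telemetry packet size
POLL_SECONDS = 4         # typical SCADA polling cycle
BURST_FACTOR = 1000      # peak bursts up to three orders of magnitude above base

# Assumed values, for illustration only
SENSING_POINTS = 10_000
BASE_EVENTS_PER_SECOND = 50

# Steady telemetry load when every point is polled once per cycle
telemetry_bytes_per_second = SENSING_POINTS * PACKET_BYTES / POLL_SECONDS
telemetry_mbit_per_second = telemetry_bytes_per_second * 8 / 1e6
telemetry_gb_per_day = telemetry_bytes_per_second * 86_400 / 1e9

# Worst-case event rate the network may have to absorb during a burst
peak_events_per_second = BASE_EVENTS_PER_SECOND * BURST_FACTOR

print(f"telemetry: {telemetry_mbit_per_second:.1f} Mbit/s, "
      f"{telemetry_gb_per_day:.0f} GB/day")
print(f"event burst: {peak_events_per_second} messages/s")
```

Under these assumptions the steady telemetry stream alone amounts to hundreds of gigabytes per day, before oscillography or burst traffic is considered.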

Telemetry and oscillography are often stored in time-serialized databases, while usage data, asynchronous event messages, and meta-data are often stored in relational databases. Meta-data, such as connectivity, is stored as binary large objects (BLOBs) in products such as a geospatial information system (GIS), usage data is stored in a meter data-management system (MDMS), and asynchronous messages are stored in a variety of places, one of which is a distribution management system (DMS).

The dilemma is that neither of the prevailing data-management technologies is an ideal way to store, manage, and analyze these data types. This is especially true when one is attempting to analyze across data types.

The utility industry has come to a point where the data-management technology of the past no longer fits the needs of the industry. This comes just at a time when the amounts of data produced are about to increase significantly. What is needed is a data-management technology that is optimized for analysis rather than for constraints such as storage space and speed. Ideally, this database technology would be built much like the grid itself, with groups of assets that have a natural relationship between the classes.

Object-Oriented Database Technology

One type of database that has been around for two decades but has had less penetration in the utility industry is the object-oriented database. This type of database is used in the telecommunications and airline industries to track large numbers of objects. Unlike relational (SQL) or serialized databases, object-oriented databases offer seamless integration with object-oriented languages. Whereas SQL is a database language of its own, separate from the programming language, the object database uses the object-oriented programming language as its data-definition language (DDL) and data-manipulation language (DML). The application objects are the database objects. Queries are used for optimization based on use cases, not as the sole means of accessing and manipulating the underlying data. No application code is needed to manage the connectivity between objects or how they are mapped to the underlying database storage. Object databases use and store object identity directly, bypassing the need for the CPU- and memory-expensive, set-based JOIN operations of SQL. Object databases offer traditional database features, such as queries, transaction handling, backup, and recovery, along with advanced features such as distribution and fault tolerance.
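
The idea of following object references instead of joining tables can be sketched in plain Python. This is not Versant's API or the API of any object database, and nothing here is persisted. It only illustrates, with hypothetical `Substation`, `Transformer`, and `Meter` classes, how application code navigates directly from one object to the next when the application objects are the stored objects.

```python
from dataclasses import dataclass, field


@dataclass
class Meter:
    serial: str
    kwh: float


@dataclass
class Transformer:
    name: str
    meters: list = field(default_factory=list)


@dataclass
class Substation:
    name: str
    transformers: list = field(default_factory=list)


# The object graph mirrors the physical hierarchy; relationships are
# direct references rather than key columns.
north = Substation("North", [
    Transformer("T1", [Meter("M-001", 12.5), Meter("M-002", 7.5)]),
    Transformer("T2", [Meter("M-003", 20.0)]),
])


def total_kwh(substation):
    """Walk substation -> transformers -> meters by reference, with no JOIN."""
    return sum(m.kwh for t in substation.transformers for m in t.meters)


print(total_kwh(north))
```

In an object database the same traversal would run against stored objects, with the database resolving each reference through its stored object identity.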

Leveraging International Standards in New Ways

In electric power generation, transmission, and distribution, the common information model (CIM) is a standard developed by the electric power industry to fully describe the assets, topology, and processes that make up the grid. The CIM is a set of standards, adopted by the International Electrotechnical Commission (IEC), whose original purpose was to allow application software to exchange information about the configuration and status of the grid.

The CIM is described as a UML model. The central package of the CIM is called the “wires model,” which describes the basic components used to transport electricity. The standard that defines the core packages of the CIM is IEC 61970-301. It focuses on the needs for electricity transmission, where related applications include energy-management system, SCADA, planning, and optimization. The IEC 61968 series of standards extend the CIM to meet the needs of electrical distribution, where related applications include: distribution-management system, outage-management system, planning, metering, work management, geographic information system, asset management, customer-information systems, and enterprise resource planning.

The CIM UML model, which describes information used by the utility, is an ideal candidate on which to base an object-oriented database. It has several advantages:

- The schema, derived from the standard, would be public and well documented.

- The UML relationships would have been vetted through the standard development process.

- Messages based on the UML model are, in themselves, standards.

It is believed that additional insight into the inner workings of the grid will allow for better, faster, more insightful, and more widely useful analytics. This will be done by developing a schema in an object-oriented database, based on the CIM, that describes the relationships between actual object classes in the utility.
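
A heavily simplified sketch of what such a schema could look like in code is given below. The class names loosely follow classes from the CIM wires model (`IdentifiedObject`, `ConductingEquipment`, `Terminal`, `ConnectivityNode`), but the attributes and associations are reduced to the bare minimum, and the `connect` and `neighbours` helpers are hypothetical additions for this example rather than part of the standard.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class IdentifiedObject:
    mRID: str   # master resource identifier
    name: str


@dataclass(eq=False)
class ConnectivityNode(IdentifiedObject):
    terminals: list = field(default_factory=list)


@dataclass(eq=False)
class ConductingEquipment(IdentifiedObject):
    terminals: list = field(default_factory=list)


@dataclass(eq=False)
class Terminal(IdentifiedObject):
    equipment: ConductingEquipment = None
    node: ConnectivityNode = None


class ACLineSegment(ConductingEquipment):
    pass


class Breaker(ConductingEquipment):
    pass


class PowerTransformer(ConductingEquipment):
    pass


def connect(equipment, node, mrid):
    """Hypothetical helper: attach equipment to a node through a new terminal."""
    terminal = Terminal(mrid, f"{equipment.name}@{node.name}", equipment, node)
    equipment.terminals.append(terminal)
    node.terminals.append(terminal)
    return terminal


def neighbours(equipment):
    """Equipment sharing a connectivity node with the given equipment."""
    found = []
    for terminal in equipment.terminals:
        for other in terminal.node.terminals:
            if other.equipment is not equipment and other.equipment not in found:
                found.append(other.equipment)
    return found


# Line 1 -- N1 -- CB1 -- N2 -- T1
line = ACLineSegment("line-1", "Line 1")
breaker = Breaker("brk-1", "CB1")
transformer = PowerTransformer("xfmr-1", "T1")
n1 = ConnectivityNode("node-1", "N1")
n2 = ConnectivityNode("node-2", "N2")
connect(line, n1, "term-1")
connect(breaker, n1, "term-2")
connect(breaker, n2, "term-3")
connect(transformer, n2, "term-4")

print([e.name for e in neighbours(breaker)])
```

Because topology is expressed as references between objects, a question such as "what is connected to this breaker?" becomes a short traversal rather than a multi-table query.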

3. Who benefits the most from state of the art data management from the smart grid and why?

NoSQL data management and analytics solutions provide a quantum leap in the electricity sector’s effort to improve the ability of electric power grid operators to detect and react to system faults that would otherwise lead to power disruptions. Versant’s integrated Big Data management and analytics development solution offers options to quickly identify precursors to impending disruptions of the grid by using a wide variety of data sources and state-of-the-art analytic methods. Unlike many industries, power delivery is notoriously variable, with daily, weekly, and annual variations due to variability in customer load, generation dispatch, delivery system outages, and other reasons.

This variability has challenged the industry in discerning patterns that can be used to identify off-normal conditions. NoSQL state-of-the-art data management technology provided by Versant can address these challenges unlike any other data management techniques available today. It takes advantage of the vast amount of streaming data available to grid operators. The technology can rapidly and reliably screen and analyze that data to detect faulty values and correct for missing elements in real time.

Also, to take advantage of increased data concurrency, multi-level techniques for real-time data processing need to be designed. These will provide a “bottom-up” aggregation of data from the measurement level to the utility-operator level. This aggregation will not only minimize the bandwidth requirements for the communication channel but also provide a faster and finer-grained level of local grid monitoring and control. It will also ensure that the most urgent alerts and messages reach operators in real time. Versant’s object-oriented database technology is well suited as a platform for the infrastructure needed to create proper situational awareness in real time.
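
The bottom-up aggregation described above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not a description of Versant's implementation. The voltage limits, the feeder names, and the `summarize` and `roll_up` functions are all hypothetical.

```python
from statistics import mean

# Assumed acceptable voltage band on a 120 V base (illustrative limits).
LOW, HIGH = 114.0, 126.0


def summarize(source, readings):
    """Measurement level: reduce raw readings to one summary plus alerts."""
    return {
        "source": source,
        "count": len(readings),
        "mean": mean(readings),
        "min": min(readings),
        "max": max(readings),
        "alerts": [(source, v) for v in readings if not LOW <= v <= HIGH],
    }


def roll_up(source, summaries):
    """Higher level: combine child summaries without seeing the raw readings."""
    total = sum(s["count"] for s in summaries)
    return {
        "source": source,
        "count": total,
        "mean": sum(s["mean"] * s["count"] for s in summaries) / total,
        "min": min(s["min"] for s in summaries),
        "max": max(s["max"] for s in summaries),
        # Alerts pass through every level unchanged so operators still see them.
        "alerts": [a for s in summaries for a in s["alerts"]],
    }


feeder_a = summarize("feeder-A", [120.0, 121.0, 119.0])
feeder_b = summarize("feeder-B", [118.0, 127.5, 120.5])
substation = roll_up("substation-1", [feeder_a, feeder_b])

print(substation["count"], substation["mean"], substation["alerts"])
```

Only the two feeder summaries travel upstream rather than all six readings, which is where the bandwidth saving comes from, while the out-of-range reading still reaches the operator level as an alert.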

4. What are the privacy and security issues and regulations that are important to examine when deploying data management solutions?

The electric grid is a “system of systems,” managed by thousands of people, computers, and manual controls, with data supplied by tens of thousands of sensors connected by a wide variety of communications networks. Over the next 20 years, the growth in percentage terms of data flowing through grid communications networks will far exceed the growth of electricity flowing through the grid. Many advances, from the integration of variable energy resources to wide-area situational awareness, real-time control, and demand response, result from or depend on this increase in data collection and communications.

Critical challenges will arise from the expansion of existing communications flows and the introduction of new ones. While the increase in data communications will bring significant benefits, it also will give rise to new costs and challenges. Beyond the direct costs of hardware, software, networks, and staff, significant additional costs may arise from the improper or illegal use of data and communications. Unfortunately, these costs are difficult to quantify and can only be discussed in terms of probabilities and estimates of potential impacts to businesses and consumers, complicating the cost–benefit analysis of spending to protect communications systems. In addition, the highly interconnected grid communications networks of the future will have vulnerabilities that may not be present in today’s grid.

Millions of new communicating electronic devices, from automated meters to synchrophasors, will introduce attack vectors. These are paths that attackers can use to gain access to computer systems or other communicating equipment, and they increase the risk of both intentional and accidental communications disruptions.

Cyber security refers to all the approaches taken to protect data, systems, and networks from deliberate attack as well as accidental compromise, ranging from preparedness to recovery. Increased data communications throughout the electric grid will introduce new cyber security risks and challenges, to both local and wide-scale grid systems.

Existing point-to-point and one-way communications networks will need to be expanded or replaced with networks designed for two-way communications. Decisions to standardize on specific protocols require input from a wide range of industry stakeholders and from federal agencies, which play an important convening role. An open issue, however, is whether to base future grid communications on utility-owned private networks or on facilities operated by or leased from telecommunications companies.

A related regulatory issue is the allocation of spectrum for utility communications. It will take a determined cyber security review of the design and implementation of grid components and operational processes to reduce the likelihood of attack and the scope of potential impact. Making the grid impenetrable to cyber events is impossible due to rapidly expanding connectivity and evolving threats. One thing to consider about the current NERC Critical Infrastructure Protection (CIP) standards is whether they focus too much on reporting and documentation rather than on substantial cyber security improvements; compliance with standards does not necessarily make the grid secure.

Information privacy and security is an issue similar to cyber security. The future electric grid will collect, communicate, and store detailed operational data from tens of thousands of sensors as well as electricity-usage data from millions of consumers. A number of issues arise from making this data available to the people who need it while protecting it from those who do not. Key questions that are being addressed by the industry and regulators include (1) what data are we concerned about? (2) how do we determine who should access that data, when, and how? (3) how do we ensure that data is appropriately controlled and protected? (4) how do we balance privacy concerns with the business or societal benefit of making data available?

While privacy discussions in the press focus on consumer electric usage data, control over grid operational information is arguably more important. Information privacy concerns are not limited to one type of organization or one type of use. Regulating fundamental privacy principles now will ensure that data collection and storage systems do not have to be redesigned in the future and will help minimize the privacy challenges that may obstruct future grid projects. An appropriate function of the regulatory process is to balance the value of data collection with other concerns. Electricity customers should have significant control over access to data about their electricity usage, both to supply third-party services they consider valuable and to restrict other usage that they consider detrimental.

The ability of the utility industry to incorporate technological developments in electric grid systems and components on an ongoing basis will be critical to mitigating the data communications and cyber security challenges associated with grid modernization. Development and selection processes for interoperability standards must strike a balance between allowing more rapid adoption of new technologies (early standardization) and enabling continuous innovation (late standardization).

As communications systems expand into every facet of grid control and operations, their complexity and continuous evolution will preclude complete protection from cyber attacks. Response and recovery, in addition to protection, are therefore important concerns for cyber security processes and regulations. Research funding will be important to the development of best practices for response to and recovery from cyber attacks.

There is currently no national authority for overall grid cyber security preparedness. The Federal Energy Regulatory Commission (FERC) and North American Electric Reliability Corporation (NERC) have authority over the development of cyber security standards and compliance for the bulk power systems, but there is no national regulatory oversight of cyber security standards compliance for the distribution system.

Maintaining appropriate control over electricity usage and related data will remain an important issue with residential and commercial consumers. Privacy concerns must be addressed to ensure the success of grid enhancement and expansion projects and the willingness of electricity consumers to be partners in these efforts. The National Institute of Standards and Technology (NIST) and State Public Utility Commissions (PUCs) should continue to work with industry’s organic standardization processes to foster the adoption of interoperability standards. Congress should clarify FERC’s role in adopting NIST-recommended standards as specified in the Energy Independence and Security Act of 2007 to ensure a smoothly functioning industry-government partnership.

The federal government should designate a single agency to have responsibility for working with industry and to have appropriate regulatory authority to enhance cyber security preparedness, response and recovery across the electric power sector, including both bulk power and distribution systems.

PUCs, in partnership with appropriate federal agencies, utilities, and consumer organizations, should focus on coordinating their activities to establish consistent privacy policies and process standards relating to consumer energy usage data as well as other data of importance to the operation of the future electric grid.

Privacy, security, and regulation in the utility industry make up a broad topic. The “two poles” of this planet are 1) customer privacy, security, and regulation and 2) grid privacy, security, and regulation. Just like our north and south poles, they are quite different environments, with the grid being much more sterile than the complex issues of customer privacy and security.

5. Are there lessons that utilities can learn from other industries in terms of data management solutions related to Big Data? If so which would be good examples?

The power-delivery segment of the electric utility industry has been historically challenged in applying techniques developed in other industries to its line of business. Techniques like just-in-time inventory methods fail during large weather events, and maximizing utilization of assets fails to take into account the large swings in daily and seasonal loadings. However, there is one area that aligns well with the segment: the military methods applied to the battlefield. The scale and diversity of assets, land area involved, and deployment of human assets are all similar in their functions. Recent developments in communications, computational capability, and data availability all lend themselves to a high level of intelligence both on the battlefield and within the utility. The proposed benefits of the “smart grid” align well with recent developments in data integration, mining, and fusion.

Unlike process facilities—such as oil refineries, paper pulp mills, and generation stations that have relatively fixed modes of operation that easily lend themselves to more commercialized pattern-recognition methods—power delivery has daily, weekly and annual variations due to customer load variability, generation dispatch, delivery system outages and other reasons. This variability has challenged the industry in coming up with repeatable patterns. Recent work by EPRI along with Southern Company and other utilities developed the concept of “similar day,” where the current system conditions can be compared to previous days with a similar construct.

While this concept is still in its infancy, the concept is sound and can be further developed into an automated process. The development of “similar days” can provide a suitable data stream for subsequent analytics such as data mining and fusion. Versant’s integrated Big Data management and analytics framework provides a perfect platform for the deployment of this approach.
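
One way an automated “similar day” search could work is a nearest-neighbor comparison of daily feature vectors. The Python sketch below is an assumption about how such a search might be built, not a description of the EPRI or Southern Company method. The features (peak load, minimum load, mean temperature), the scaling constants, and the historical values are invented for illustration.

```python
import math

# Assumed scale per feature so that no single unit dominates the distance:
# peak load (MW), minimum load (MW), mean temperature (degrees C).
SCALE = (1000.0, 1000.0, 40.0)


def distance(a, b):
    """Euclidean distance between two days after per-feature scaling."""
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALE)))


def most_similar_days(today, history, k=2):
    """Return the k historical dates whose conditions are closest to today's."""
    ranked = sorted(history, key=lambda day: distance(today, history[day]))
    return ranked[:k]


# Invented historical days: date -> (peak MW, minimum MW, mean temperature C)
history = {
    "2012-07-10": (950.0, 520.0, 31.0),
    "2012-07-11": (980.0, 540.0, 33.0),
    "2012-12-05": (700.0, 430.0, 2.0),
}
today = (975.0, 535.0, 32.5)

similar = most_similar_days(today, history)
print(similar)
```

The dates returned would then select which historical data streams are handed to subsequent analytics such as data mining and fusion.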

6. Could you provide more details about some research projects with EPRI to help utilities address the Big Data problem?

Versant’s solutions already manage many of the global data infrastructures that make power grids more intelligent, including several of the largest telecommunication networks around the world. As utilities have begun to encounter similar data management challenges of speed, reliability, effectiveness and accessibility by deploying smart grids, Versant’s solutions have already been implemented by transmission system operators in the EMEA region.

7. How quickly do you think we will see real world smart grid solutions roll out?

Today most utilities are in smart grid deployment mode, particularly the investor-owned utilities (IOUs). The Public Utilities Commissions have established smart grid strategic plans for all IOUs nationwide. At this point, many utilities have entered the implementation phase. The roll-out of smart grid solutions has been scheduled for the next couple of years as significant investments need to be made. By 2020, we should see a large number of real world smart grid deployments in place.

Bert Taube

Bert Taube is the Director of Business Development at Versant. He holds a Ph.D. in electrical engineering from the University of Rostock and an MBA from the Kellogg School of Management at Northwestern University.

The content & opinions in this article are the author’s and do not necessarily represent the views of AltEnergyMag
